There are many complicated places in our world. A supermarket is a complicated place. Where do you position cereal, for example, as opposed to soup? Meat as opposed to milk? How do you entice people to buy products they are unfamiliar with? If you make a mistake running a supermarket, your margin of profit may suffer, but no one dies.

Not so with another very complicated place, a battlefield. If you make mistakes out there, people – your people, even you – could die. It’s probably why wars are so terribly transfixing, why reading about them, or watching television reporting of wars, draws our attention so strongly. We are able to imagine ourselves into a war without being there, but what is it we are imagining, exactly? Well, what we see in wars is the instinct for self-preservation played out in real time with real consequences. Wars, even so-called bad wars we have gotten ourselves into for bogus reasons, even wars based on lies, like Vietnam or Iraq, are still at their roots about life and death. If you fight a battle and lose, many people on your side are going to die. If you fight a battle and win, more people on your side will live.

If you get involved in an existential war against an aggressor nation and you lose, not only will many of your citizens die, but your nation will cease to exist. Self-preservation thus becomes an issue not only for individuals, but for individuals as citizens, as the collective force that makes a nation. That is where Ukraine finds itself right now, at war for its survival against a nation, Russia, which has invaded its land with the express purpose of ending Ukraine’s existence.

That raises the question: how far would you go to preserve yourself as an individual and as a nation? We just had a big argument amongst ourselves as Americans over sending cluster munitions, mainly in the form of artillery shells, to Ukraine for use in its fight against Russian aggressors. Those opposed to sending cluster munitions to Ukraine argued that unexploded bomblets left on the battlefield have been known to injure or kill innocent civilians long after wars are over. There is even an international treaty banning cluster munitions for that very reason, the Convention on Cluster Munitions, signed by more than 120 nations, but not by Russia, the United States, or Ukraine.

It is true that cluster munitions of the kind we sent to Ukraine are terrible things. Unexploded cluster bomblets are killing civilians, including children, wherever they have been used around the world – in Syria and Yemen, to give just two examples. Ukraine asked for cluster munitions, arguing that its war against Russian aggression is truly existential: if it does not win, Ukraine will cease to exist. Whole parts of Ukraine already do not exist. We have all seen the photos of entire cities and towns like Mariupol and Bakhmut reduced to rubble, and for every photo of a ruined town we have seen, dozens more ruined towns exist all over eastern and southern Ukraine. We’ve seen satellite photos of fields once used to grow crops like wheat and soybeans pockmarked by hundreds, sometimes many hundreds, of craters from artillery shells that missed their mark. It will be years before those fields are restored to agricultural use.

So where do you draw the line on the infinite scale of terrible that is warfare? History tells us that drawing it is impossible. Every advance in warfare has made war more terrible. What follows is by no means a comprehensive account of warfare and its machinery. I am certain to omit some so-called “advances” in how wars have been fought and with what machines. But here’s a brief shot at recounting the multi-millennia-long journey of human beings developing new and better ways to kill one another.

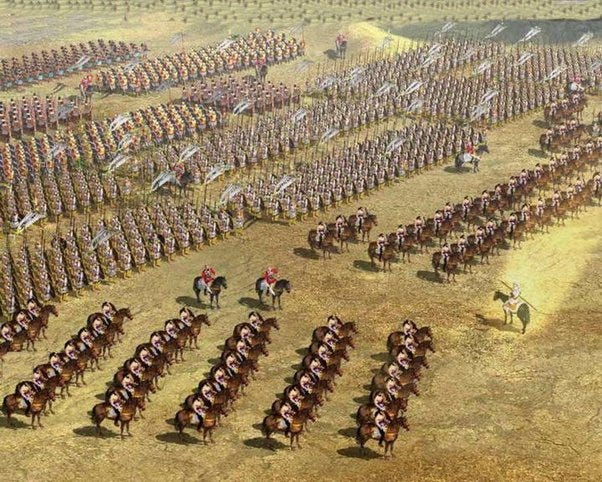

Ancient armies like Alexander’s and Caesar’s fought what we now call hand-to-hand combat, with clubs and swords and lances and knives. They lined up and charged, and whichever army killed more of the other’s soldiers won. Bows and arrows allowed armies to project power beyond the reach of individual soldiers, but they were still hand-operated and muscle-powered. The invention of gunpowder by the Chinese in the 9th century (as close as historians can place it) led to weapons like the fire lance, the hand cannon, and the bombard, a muzzle-loaded, mortar-like cannon that fired stones, metal balls, and incendiary materials.

On it went from there. The hand cannon became a gun firing metal balls and then bullets. The bombard became a cannon firing explosive metal balls. The 155 mm and 152 mm howitzers used today by both sides in Ukraine are technological advances on the bombard, but they do the same thing: they project death and destruction beyond the reach of an arm or an arrow. The same is true of the AK-47 rifle, centuries removed from the hand cannon but, for all intents and purposes, an incremental advance on that primitive weapon.

For every advance in weaponry, there was another advance to improve its effectiveness, accuracy, and deadliness. Muzzle-loading rifles became breech-loaders. Breech-loaders became repeaters. Repeaters gained clips of ammunition and then magazines that carried even more bullets, and rifles came to be fired semiautomatically – a bullet fired every time you pulled the trigger, without the need to cock the rifle. The first military rifle I fired was an M-1, which held a clip. The next, the M-14, held a small magazine. The next, the M-16, held a larger magazine and fired both semiautomatically and automatically. Machine guns went from hand-cranked Gatling guns to gas-operated, belt-fed machines that fired continuously as long as you held down the trigger.

The field of artillery gained accuracy and speed. Today, modern U.S. 155 mm howitzers can fire guided munitions. And we haven’t even started on rockets, which went from primitive things fired into the sky that went who-knew-where, but at least toward the enemy, to the guided rockets fired by the HIMARS launchers we have sent to Ukraine. Rockets carried bigger and bigger warheads and gained range. They were equipped with specialized warheads that could explode in the air and spray fragments across broad swaths of battlefield, or penetrate deep into the ground and destroy bunkers and other underground fortifications. Finally, ballistic missiles could be fired across continents and oceans.

And we haven’t even touched on the atomic bomb, the hydrogen bomb, and the super-ultra-terrible nuclear missiles that have proliferated into the many thousands around the globe and are capable, we think, of wiping out life on earth, or enough of it anyway to make going on living untenable.

There were advances in armor, and in the ammunition to defeat it, as well. Tanks went from primitive mobile gun platforms to thundering killing machines firing armor-piercing projectiles at enemy tanks. Then tanks sprouted so-called reactive armor, explosive cladding on the outside of thick steel hulls meant to defeat armor-piercing projectiles. Armies even came up with metal cages affixed to armored vehicles like the U.S. Stryker eight-wheeled personnel carrier to protect them from shoulder-fired Russian RPG-7s by causing the warhead to explode before it reached the body of the vehicle.

And of course they had to come up with so-called smart weapons, like smart bombs, smart ground-fired rockets, and smart missiles fired from aircraft. Smart munitions can be guided precisely enough to hit targets as small as the top of a small bunker or a single enemy tank. We have smart cruise missiles that fly unmanned, below enemy radar coverage, for hundreds of miles and accurately destroy targets large and small. And we came up with anti-missile missiles like the Patriot to shoot down enemy missiles, including low-flying, radar-evading cruise missiles.

The latest so-called advance in warfare is the drone – an unmanned aircraft, either fixed-wing or rotary-wing, used for surveillance or armed with bombs or missiles as yet another flying weapon.

Nearly all of these weapons, short of nuclear arms, are being used by Ukraine against Russian invaders, and by Russia in its invasion of Ukraine.

And now the escalation in weaponry has reached artificial intelligence (AI), the latest terrible development in the already terrible ways of warfare. We are told again and again by experts that AI carries within it the danger of wiping out human life on the planet. Predictions of how this would happen vary, but a recent op-ed in the New York Times quoted one commentator, writing in Time magazine, as saying, “If somebody builds a too-powerful AI, under present conditions, every single member of the human species and all biological life on Earth dies shortly thereafter.”

Well. That gets your attention, doesn’t it? High on every expert’s radar, of course, is the combination of AI technology and warfare in whatever form. There have already been protests by workers at both Microsoft and Google against defense contracts the technology giants signed to help the Pentagon, never mind that the help they would provide would be used to defend the good old U.S.A. The combination of AI technology and weaponry is said to be frightening for many reasons, but the one you see most often is the fear of what would happen if “machines” were making decisions about targeting and firing the extremely deadly weapons in our military arsenal.

It’s kind of like the ultimate projection of force. First, the arrow was able to kill an enemy dozens of feet away from you. Then a bullet could do the same thing hundreds of feet away. An artillery round or a rocket warhead could kill miles away, a missile hundreds of miles away…and on and on and on. What does all of this have in common, including the increasing use of drones? Moving humans as far from the active battlefield as possible so that fewer of them are killed. That has been one of the goals for as long as there have been wars.

The ultimate goal of warfare, however, as spelled out by Clausewitz and Sun Tzu and other military geniuses, has been to kill as many of the enemy as possible as fast as possible, so that the enemy suffers such catastrophic losses that he throws down his weapons and surrenders. Ukraine has spelled out what that would mean in this war: Russia would leave the Ukrainian lands it has seized and go home.

The Washington Post published an excellent article last week on the advances Ukraine has made in using AI in drones to overcome Russian counter-drone measures, such as jamming the communications between drone operators and their drones. Ukraine has apparently developed AI software that enables drones to fly to and strike targets accurately even when communications are jammed and GPS guidance is disabled. How this is accomplished was not spelled out, presumably because it is very, very top secret. But enough is known about other guided weapons to suggest that some form of terrain-following technology is combined with target-identification software, enabling a drone cut loose from its ground controls to direct itself without contact with a satellite or a controller and still destroy its target.

This would no doubt mean AI is being used to “teach” drones how to reach targets and how to “see” them. The Washington Post story quotes a drone company executive saying that its AI technology abides by a directive from Chairman of the Joint Chiefs of Staff Mark A. Milley, who has insisted that “humans” remain in the “decision making loop.”

That apparently means that humans select the target, but once that is done, AI technology enables the drone to reach the target and release its munition or, in the case of so-called suicide drones, fly into the target.

It would appear that the AI technology enables the drone to identify its target using parameters programmed into it, but by itself that would be little different from programming GPS coordinates into a smart weapon so it can be dropped on, or fly to, the target at those coordinates. AI is apparently being used to “teach” the targeting mechanism in Ukraine’s drones to identify targets visually – using photos from previous surveillance of the target, for example – or by other identifying parameters.

Here is where the humans-in-the-loop thing begins to get fuzzy. If the humans teach the drone to hit targets that have a certain shape common to military targets, like the shape of a tank, that would make sense. But not all targets are that easy to identify. It could be that the AI part of the human-in-the-loop connection is taking the human’s information about targets and using it to identify targets independently. Say the drone is taught that ammunition dumps have a certain look – the shapes of stacked munitions and of the structures associated with ammunition dumps, such as protective berms or earth-covered concrete bunkers. The drone sees what it has been taught is an ammunition dump, and it fires its munition.

But what if the ammunition dump is instead a strangely or uniquely designed schoolhouse or hospital?

You see where this is going: right where the people who are afraid of AI infiltration of weaponry say it’s going.

To which all I can say is, just try stopping it. Humans have not been able to stop the development of deadly weaponry capable of killing innocent civilians, from primitive bombards and hand cannons all the way to the super-ultra-multi-warhead guided ballistic missile nuclear weapon. Once something as advanced as, say, gunpowder has escaped the proverbial Pandora’s box, there’s no putting it back.

The lesson, then, is that when you consider man’s inherent and inexhaustible capacity for inhumanity to man, you are left with man’s equally inherent and inexhaustible instinct for self-preservation. How far would you be willing to go to defend yourself and your loved ones? Ukraine is willing to risk the future safety of its civilian population, including its children, to defend itself against Russians who have already killed Ukrainian civilians and children by the thousands.

Ukraine and the U.S.A. are willing to develop and deploy AI-enabled military weapons and vehicles in order to save the lives of soldiers in wars against enemies like Russia and, potentially, China, which is said to be ahead of us in AI weaponry as we speak.

Escalation is a terrible beast, just like the weapons which have historically followed its deadly trajectory. It is left to us, as ever, to make our very human and thus very imperfect attempts to control it.

Lucian,

Thank you for writing this. It is so well laid out and reasoned. We have, to one degree or another, managed to control the advances in killing technology, at least so far. I am concerned about AI becoming sentient at some point, with or without human guidance. It is something the writers of Star Trek explored, especially during and after Star Trek: The Next Generation. Season One, Episode 21, “The Arsenal of Freedom,” dealt with AI weapons that wiped out their inventors. I was a Medical Service Corps Captain when it came out, fresh from the Fulda Gap, where my Ambulance Company was to support the 11th ACR and, if we survived, help to reconstitute it. I did Iraq with the Advisors all over Al Anbar. I cannot imagine a worse catastrophe than for Ukraine to lose this war, so everything that helps Ukraine win is needed, even AI. I just hope that we manage to control it in the long run.

Peace,

Steve

The scary part is that what used to be sci-fi escapism during the ’60s Cold War years is now becoming reality. It was more fun as a Twilight Zone episode than as the daily news.